AI in cybersecurity: Dirty data is holding back big potential

Security and IT leaders everywhere are betting big on AI and automation to transform how they manage vulnerabilities. But when it comes to putting these tools into practice, there's a disconnect that's hard to ignore.

In our report, The Trust Factor: How Trusted Data Drives Smarter Vulnerability and Exposure Management, we surveyed 500 security and IT leaders. The findings reveal what many already suspect: readiness and confidence don’t always align with reality.

90% of leaders told us they feel confident their organizations can respond to a vulnerability or exposure. Yet only 25% say they fully trust the data in their own security tools.

And that’s the catch: the algorithms powering AI are only as good as the data feeding them. When that data is inconsistent, incomplete, or outdated, the promise of AI quickly falls apart.

Where AI is already making its mark

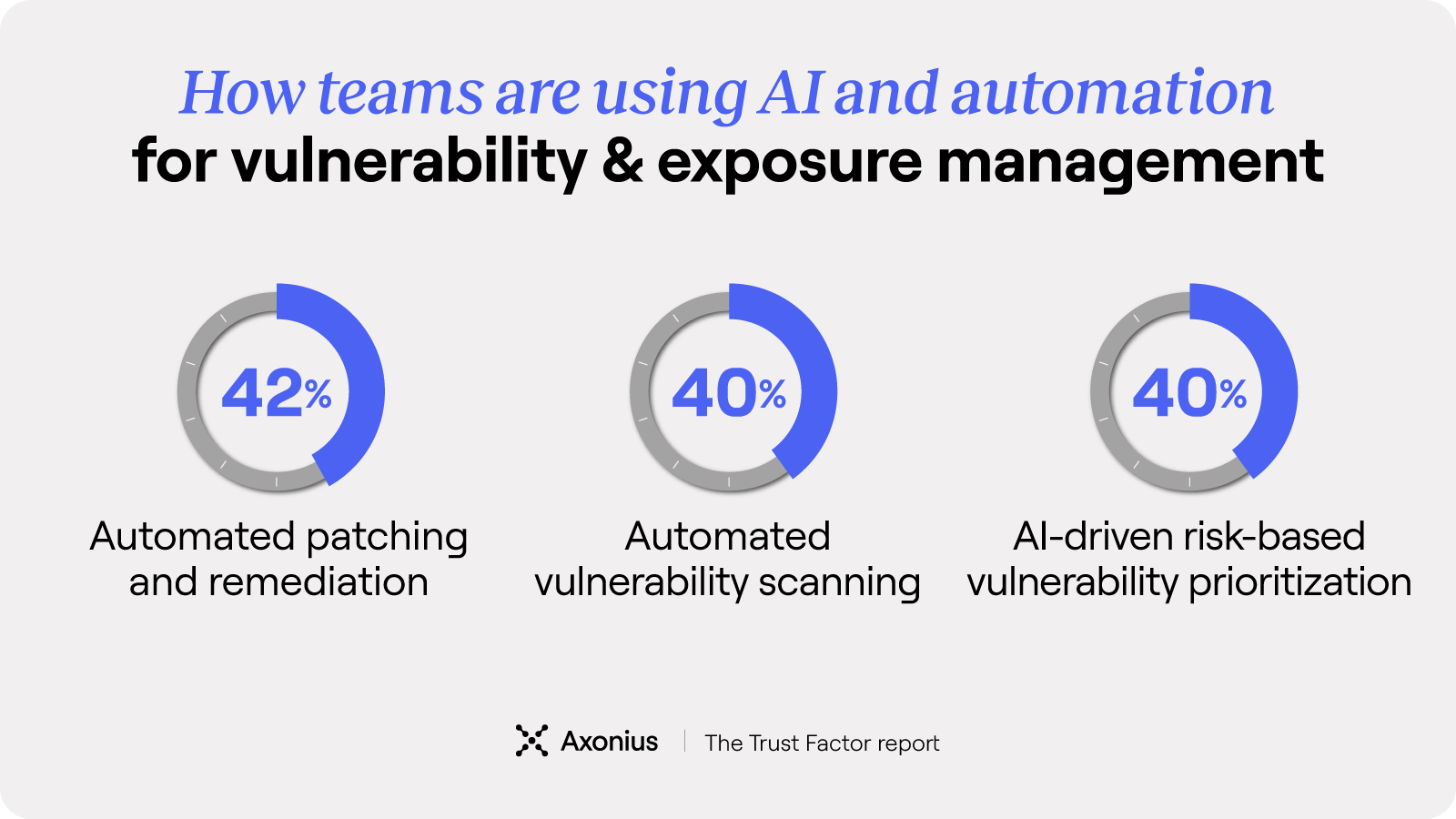

When we asked security and IT leaders where they’re applying these tools, three clear use cases rose to the top:

- 42% are using AI for automated patching and remediation
- 40% are applying it to automated vulnerability scanning
- 40% are turning to AI-driven, risk-based prioritization

The plans and aspirations are sound. But the value of these initiatives depends on whether the data feeding them can be trusted. And right now, trust is lacking.

Among the leaders we surveyed, 36% say their data is inconsistent, 34% say it's incomplete, and 33% say it's inaccurate.

When the very foundation of your AI and automation strategy looks like this, it’s easy to see why outcomes don’t match expectations.

What’s holding back AI in cybersecurity

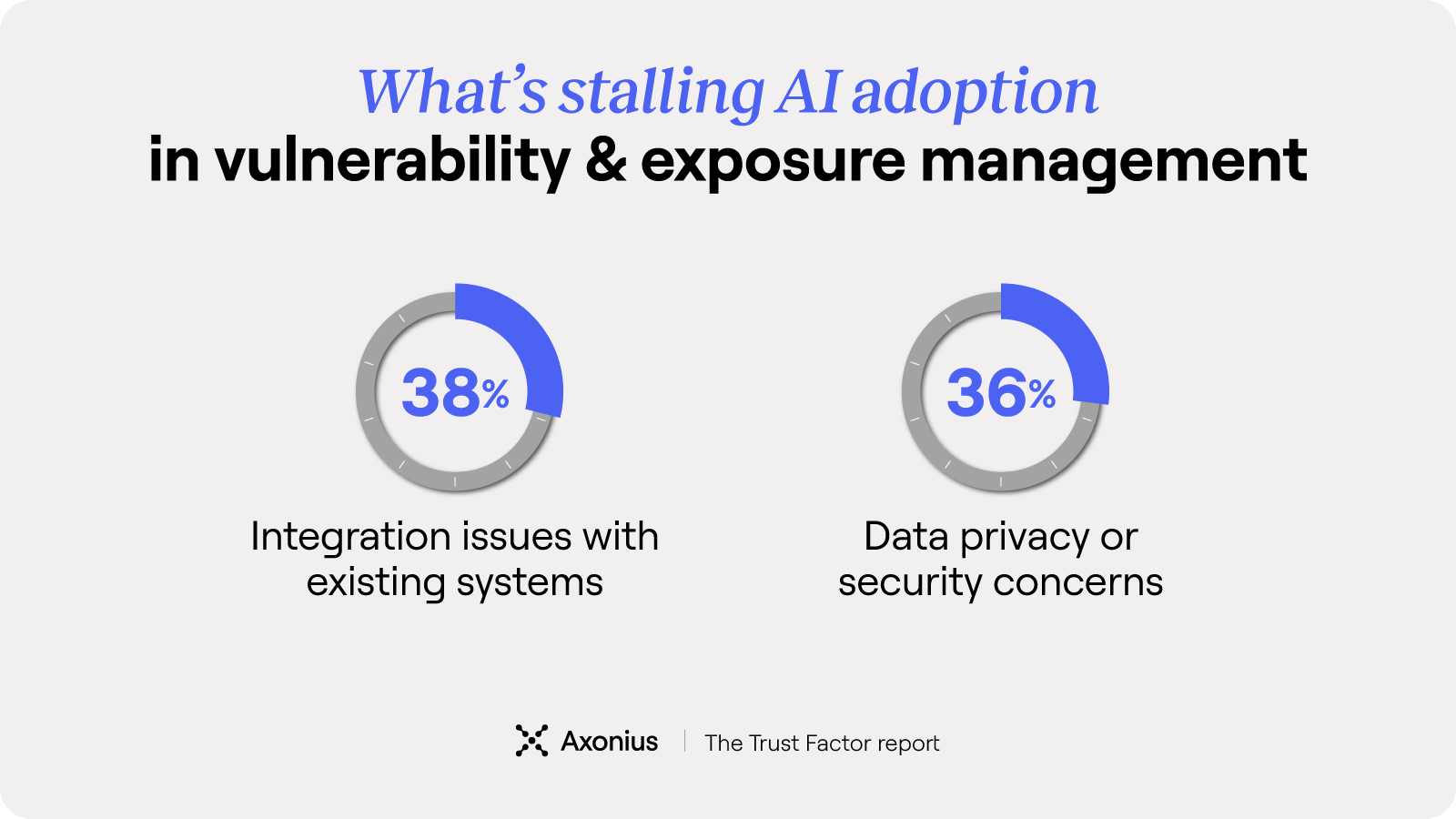

AI holds enormous potential, but scaling it requires seamless integration with the rest of your security stack. That’s where many teams hit a wall.

Nearly 38% of leaders say integrating AI into existing systems and tools is their biggest challenge. Meanwhile, 36% point to data privacy or security concerns.

It’s one thing to bring AI into the mix. It’s another to ensure sensitive data isn’t being exposed, mishandled, or siloed in the process.

You can’t automate what you don’t trust

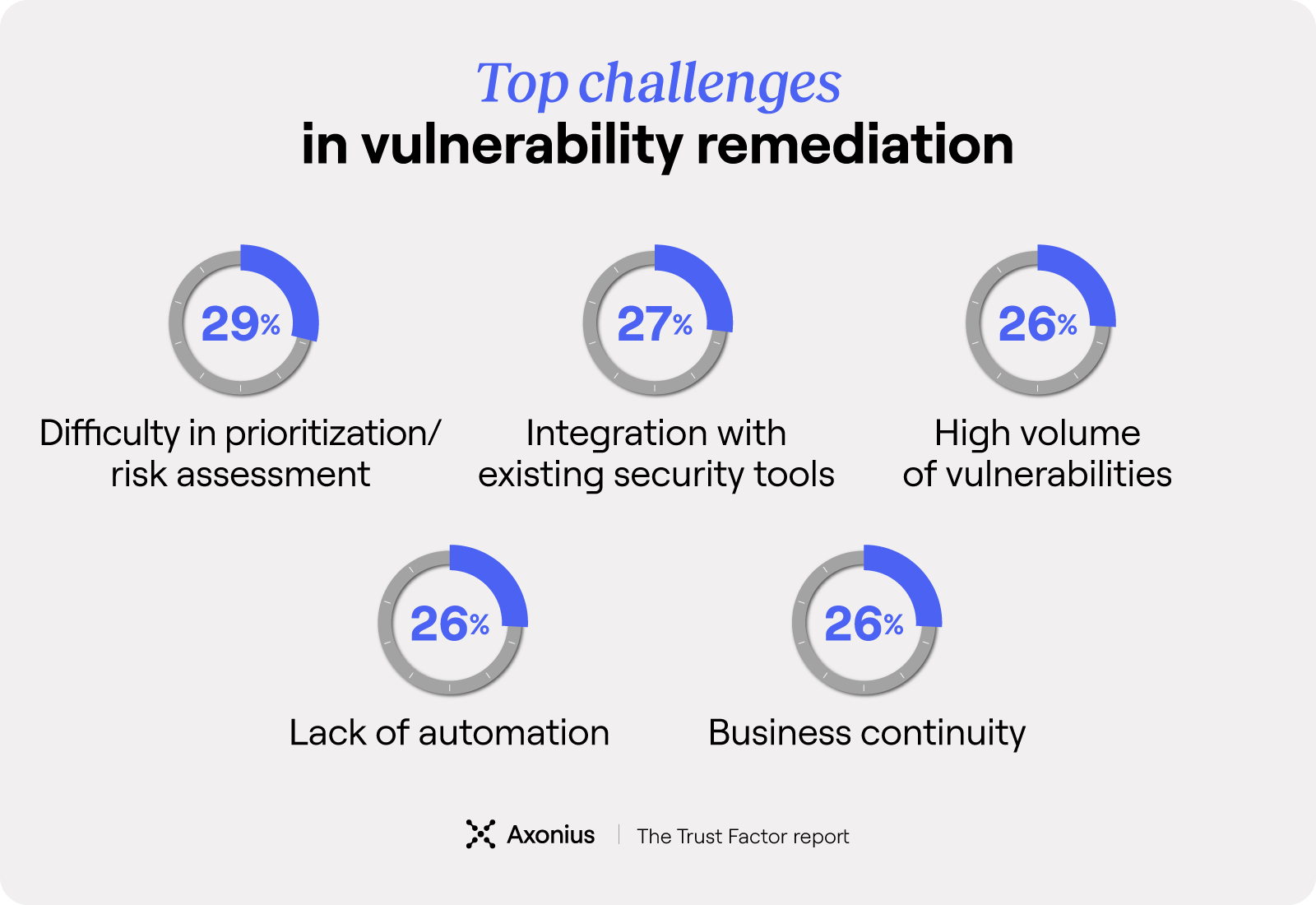

By and large, security and IT experts are confident that AI and automation will ultimately transform how their organizations detect and mitigate risks. But today, their biggest remediation challenges remain prioritization (29%), integration (27%), and the sheer volume of vulnerabilities (26%).

AI should help tackle exactly these problems. But when the inputs are shaky, automation amplifies the risk instead of reducing it: built on fragmented or inaccurate data, flawed insights drive flawed responses, executed at machine speed and scale.

Lay the right data foundations for AI

AI and automation investments can’t deliver on their full potential unless they’re fed with rock-solid, relevant data that is trustworthy, complete, connected, and fully accessible. The tools are ready now. But to realize their promise, you first have to address the data problem.

Grab the full Trust Factor report for deeper insights and actionable takeaways to prepare your data foundations for AI.

Get Started

Discover what’s achievable with a product demo, or talk to an Axonius representative.